HyperDX & Mirascope Integration

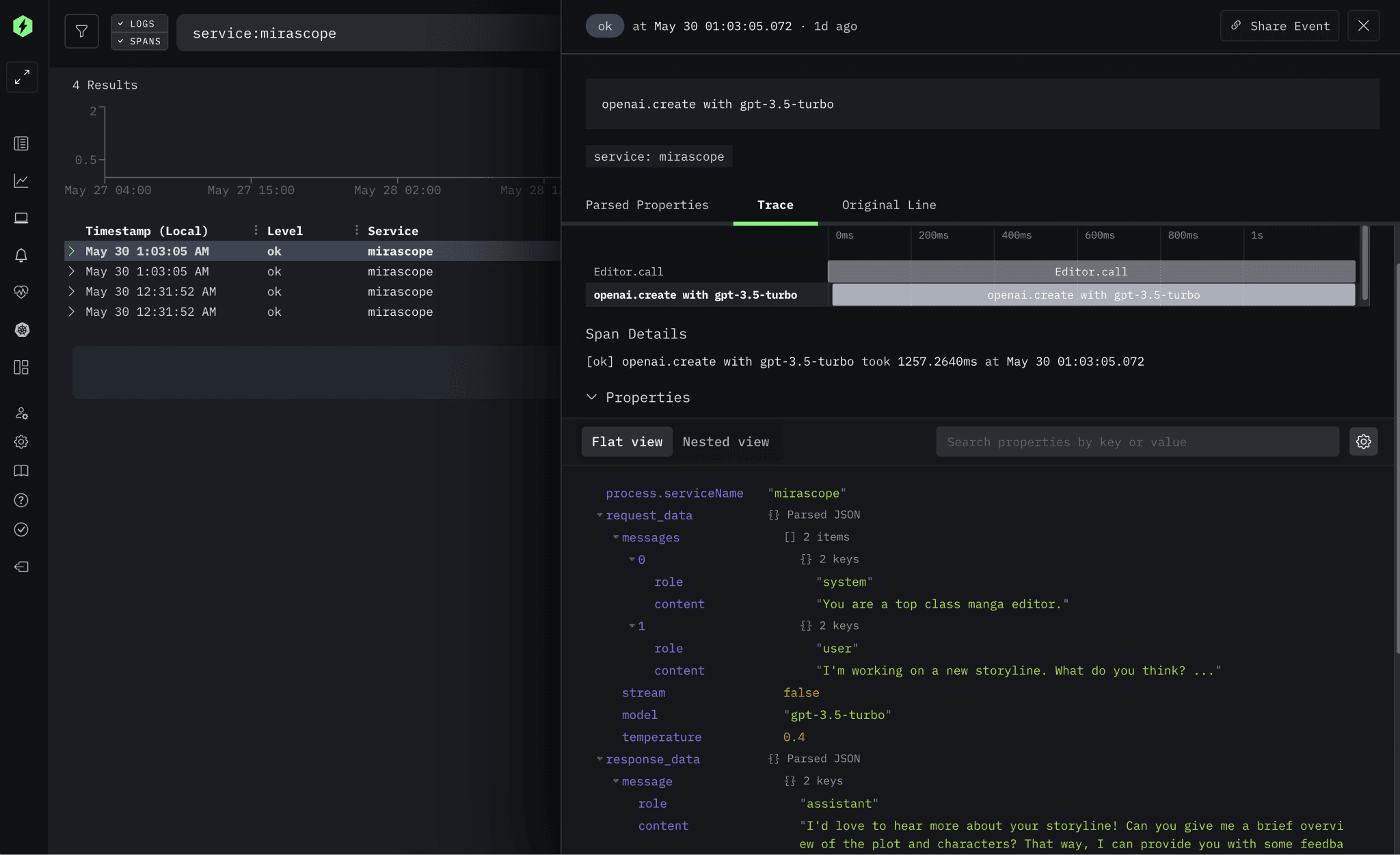

We are excited to share that HyperDX is now integrated with Mirascope, an open-source, developer-friendly Python toolkit for building LLM-powered applications. The integration gives developers deeper insight into their LLM applications, making it easier to debug RAG pipelines, agents, and other LLM-based workflows. Prompt templates, conversations, token counts, and response times now appear alongside your existing logs, traces, metrics, and session replays, so HyperDX gives you a unified observability experience on top of your Mirascope applications.

Why Mirascope?

Mirascope simplifies the development of LLM applications, making it feel like writing the Python code you are already familiar with. It abstracts away much of the complexity associated with working with LLMs, enabling you to focus on building robust, scalable applications. By integrating with HyperDX, Mirascope users can now gain deeper insights into their applications without the overhead and cost associated with traditional observability platforms like Datadog.

The Integration: What to Expect

With the new OpenTelemetry-based integration between HyperDX and Mirascope, users can now access detailed observability data for their LLM applications. Here's a breakdown of the information available in HyperDX via the integration:

- Function Call Details:
  - Function Name: Track which LLM function is being called.
  - Model Name: Identify the specific model involved in the function call.
- Request/Response Data:
  - Input Arguments and Keyword Arguments: View the detailed input parameters for each function call.
  - Model-Specific Data: Access specific information like prompt templates and model names.
  - Serialized Request Data: Examine the request details in JSON format for easy parsing and analysis.
  - Response Content: Get structured responses with role-based messages (e.g., assistant responses).
  - Response Time: Monitor the time taken for the request to complete.
- Token Count & Cost:
  - Token Count: Monitor the number of prompt and completion tokens consumed by each function call.
  - Cost Analysis: Understand the cost of each API call in dollar amounts.
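LLM providers typically bill separately for prompt and completion tokens, so a per-call dollar figure can be estimated from the token counts listed above. The sketch below only illustrates that arithmetic. It is not how Mirascope or HyperDX compute cost, and `TokenUsage`, `estimate_cost_usd`, and the per-million-token prices are hypothetical placeholders rather than real model rates.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TokenUsage:
    """Token counts for a single LLM call (hypothetical helper type)."""
    prompt_tokens: int      # tokens sent to the model
    completion_tokens: int  # tokens generated by the model


def estimate_cost_usd(
    usage: TokenUsage,
    prompt_price_per_1m: float,
    completion_price_per_1m: float,
) -> float:
    """Estimate the dollar cost of one call from its token counts.

    Prices are in USD per one million tokens and must be supplied by the
    caller; nothing here knows any provider's actual rates.
    """
    prompt_cost = usage.prompt_tokens * prompt_price_per_1m / 1_000_000
    completion_cost = usage.completion_tokens * completion_price_per_1m / 1_000_000
    return prompt_cost + completion_cost


# Hypothetical call: 1,200 prompt tokens and 300 completion tokens,
# priced at made-up rates of $0.50 and $1.50 per million tokens.
usage = TokenUsage(prompt_tokens=1_200, completion_tokens=300)
print(f"${estimate_cost_usd(usage, 0.50, 1.50):.6f}")
```

Keeping prompt and completion prices as separate parameters mirrors the split between prompt and completion token counts that the integration reports.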

All this data is automatically correlated with your existing logs, traces, and session replays in HyperDX, giving you a comprehensive view of your LLM applications' behavior so you can debug issues faster and optimize performance.

Getting Started

Integrating HyperDX with Mirascope is straightforward. Here's a basic example of how you can instrument your Mirascope application to send data to HyperDX:

```python
# my_app.py
from mirascope.openai import OpenAICall
from mirascope.otel.hyperdx import with_hyperdx


# @with_hyperdx sends this call's telemetry to HyperDX; it expects
# HYPERDX_API_KEY to be set in the environment (see the command below).
@with_hyperdx
class BookRecommender(OpenAICall):
    # {genre} is filled in from the `genre` field when the call is made
    prompt_template = "Please recommend a {genre} book."

    genre: str


response = BookRecommender(genre="fantasy").call()  # this will be logged to HyperDX
print(response.content)
```

Then start your application with the following environment variables:

```shell
HYPERDX_API_KEY=<YOUR_API_KEY> OPENAI_API_KEY=<YOUR_OPENAI_API_KEY> python my_app.py
```

You can view the full documentation and setup guide on the Mirascope HyperDX Integration Page.
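Because the example depends on both keys being present, it can help to fail fast when one is missing. The following is a minimal, standard-library-only sketch; the helper names `missing_env_vars` and `require_env_vars` are hypothetical and not part of Mirascope or HyperDX.

```python
import os
import sys
from collections.abc import Iterable, Mapping
from typing import Optional

# The two variables set by the launch command for my_app.py.
REQUIRED_ENV_VARS = ("HYPERDX_API_KEY", "OPENAI_API_KEY")


def missing_env_vars(
    names: Iterable[str] = REQUIRED_ENV_VARS,
    environ: Optional[Mapping[str, str]] = None,
) -> list[str]:
    """Return the names that are unset or empty (hypothetical helper)."""
    env = os.environ if environ is None else environ
    return [name for name in names if not env.get(name)]


def require_env_vars(names: Iterable[str] = REQUIRED_ENV_VARS) -> None:
    """Exit with a readable message if any required variable is missing."""
    missing = missing_env_vars(names)
    if missing:
        # sys.exit with a string prints it to stderr and exits with status 1
        sys.exit(f"Missing required environment variables: {', '.join(missing)}")
```

Calling `require_env_vars()` at the top of `my_app.py`, before the `BookRecommender` call, turns a missing key into a clear error message at startup rather than a failure later in the run.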